Lesson 5 Running a Simulation Study

It’s nearly impossible to overstate the value that economists ascribe to cleverness. Like most obsessions, this one is not altogether healthy.

My general philosophy in life is never to rely on being clever; instead I want to rely on being thorough and having a justifiable workflow.

In economics and statistics, simulation is a superpower. It helps us to understand our models, check for mistakes, and make unexpected connections. If you'll pardon my continuation of the Swiss Army Knife metaphor, simulation is the knife: arguably the most useful tool in your toolbox. Riffing on the quotes from above, simulation is both a substitute for and a complement to cleverness. In this lesson, we'll learn the basics of carrying out a simulation study in R using the following key commands:

sample()rbinom()rnorm()set.seed()replicate()expand.grid()Map()

We'll then apply what we've learned to shed some light on a simple but surprisingly subtle statistical error that led an entire literature astray for nearly two decades. Before we dive into the code, let me set the stage for the example that appears at the end of this lesson.

5.1 Is the Hot Hand an Illusion?

If you've read 2002 Nobel Laureate Daniel Kahneman's best-selling book Thinking Fast and Slow, you may remember this passage about the hot hand illusion, a supposed illustration of the human tendency to see patterns in random noise:

Amos [Tversky] and his students Tom Gilovich and Robert Vallone caused a stir with their study of misperceptions of randomness in basketball. The "fact" that players occasionally acquire a hot hand is generally accepted by players, coaches, and fans. The inference is irresistible: a player sinks three or four baskets in a row and you cannot help forming the causal judgment that this player is now hot, with a temporarily increased propensity to score. Players on both teams adapt to this judgment—teammates are more likely to pass to the hot scorer and the defense is more likely to doubleteam. Analysis of thousands of sequences of shots led to a disappointing conclusion: there is no such thing as a hot hand in professional basketball, either in shooting from the field or scoring from the foul line. Of course, some players are more accurate than others, but the sequence of successes and missed shots satisfies all tests of randomness. The hot hand is entirely in the eye of the beholders, who are consistently too quick to perceive order and causality in randomness. The hot hand is a massive and widespread cognitive illusion.

The research that Kahneman mentions was published in a famous paper by Gilovich, Vallone & Tversky (1985), and later summarized for a general audience in Gilovich & Tversky (1989). The abstract of the original paper says it all:

Basketball players and fans alike tend to believe that a player's chance of hitting a shot are greater following a hit than following a miss on the previous shot. However, detailed analyses of the shooting records of the Philadelphia 76ers provided no evidence for a positive correlation between the outcomes of successive shots. The same conclusions emerged from free-throw records of the Boston Celtics, and from a controlled shooting experiment with the men and women of Cornell's varsity teams. The outcomes of previous shots influenced Cornell players' predictions but not their performance. The belief in the hot hand and the "detection" of streaks in random sequences is attributed to a general misconception of chance according to which even short random sequences are thought to be highly representative of their generating process.

Between 1985 and 2011, when Kahneman's book was published, this result was replicated numerous times under a variety of different conditions, making it one of the better-documented biases in human decision-making. But it turns out that another source of bias was at play here, one of the statistical variety.

In a recent issue of Econometrica, Miller & Sanjurjo (2018) point out a subtle but consequential error in the statistical tests used in the hot hand literature. It turns out that these tests were biased against detecting evidence of a hot hand, even if it did in fact exist. Correcting this mistake and re-analyzing Gilovich, Vallone and Tversky's original dataset "reveals significant evidence of streak shooting, with large effect sizes." In response to this paper, the literature has now shifted to trying to estimate the size of the effect.13 As Benjamin (2019) points out it's still an open question whether sports fans over-estimate the importance of the hot hand phenomenon, but it's certainly not a cognitive illusion.14

There are some helpful lessons that we can draw from this episode. First, we all make mistakes--even Nobel Laureates! Second, successful replications tell us less than we might hope: they could easily reproduce the bias of the original study. But in my view, the real lesson of the hot hand affair is much simpler: you should always run a simulation study. The probabilistic error that led so many researchers to draw the wrong conclusion about the hot hand is really quite subtle.15 But at the same time, anyone who knows basic programming could have detected the mistake in half an hour if they had only bothered to look.

5.2 Drawing Random Data in R

Before we can use simulations to study the illusion of the hot hand illusion, we need to review the basics of drawing random data in R. We'll examine the functions sample(), rbinom() and set.seed() in detail. I'll also point you to a large number functions for simulating from well-known probability distributions in R. Finally, you'll have a chance to practice what you've learned by solving a few short exercises.

5.2.1 sample()

R has many helpful built-in functions for making simulated random draws. The simplest is sample(), which makes size random draws without replacement from a vector x. To test this out, I'll create a very simple vector

my_vector <- c('there', 'is', 'no', 'largest', 'integer')The following line of code makes two draws without replacement from my_vector

sample(x = my_vector, size = 2) # without replacement## [1] "is" "no"If I run the same line of code again, I may not get the same result: it's random!16

sample(x = my_vector, size = 2) # without replacement## [1] "there" "integer"To draw with replacement, set replace = TRUE

sample(x = my_vector, size = 7, replace = TRUE) # with replacement## [1] "no" "is" "integer" "is" "integer" "there" "no"As usual in R, the argument names x, size, and replace are optional. But it is considered good coding style to explicitly supply an argument name whenever we're overriding a function's default behavior. This makes it easier for anyone reading our code to understand what's happening. Since sample() defaults to making draws without replacement, it's a good idea to write replace = TRUE rather then simply TRUE. But even without writing replace =, the code will still work as long as we supply all of the arguments in the correct order:

sample(my_vector, 7, TRUE) # bad style## [1] "largest" "is" "is" "largest" "is" "no" "largest"sample(my_vector, 7, replace = TRUE) # good style## [1] "there" "there" "is" "there" "largest" "integer" "there"5.2.2 Probability Distributions in R

As a programming language targeted at statistical applications, R supplies built-in functions for all of the most common probability distributions.17

These functions follow a consistent naming convention. They being with either d, p, q, or r and are followed by an abbreviated name for a particular probability distribution. The prefix d denotes a density function (or mass function for a discrete distribution); p denotes a cumulative distribution function (CDF), q denotes a quantile function, and r denotes a function for making random draws from a particular distribution. For example: dunif() gives the probability density function of a uniform random variable, pnorm() gives the CDF of a normal random variable, qchisq() gives the quantile function of a Chi-squared, and rbinom allows us to make random draws from a Binomial distribution. The following table gives a full list of the relevant commands.

| R commands | Distribution |

|---|---|

d/p/q/rbeta |

Beta |

d/p/q/rbinom |

Binomial |

d/p/q/rcauchy |

Cauchy |

d/p/q/rchisq |

Chi-Squared |

d/p/q/rexp |

Exponential |

d/p/q/rf |

F |

d/p/q/rgamma |

Gamma |

d/p/q/rgeom |

Geometric |

d/q/p/rhyper |

Hypergeometric |

d/p/q/rlogis |

Logistic |

d/p/q/rlnorm |

Log Normal |

d/p/q/rnbinom |

Negative Binomial |

d/p/q/rnorm |

Normal |

d/p/q/rpois |

Poisson |

d/p/q/rt |

Student's t |

d/p/q/runif |

Uniform |

d/p/q/rweibull |

Weibull |

There's a single help file for all of the d/p/q/r functions for a particular distribution. For example, if you enter ?dbeta at the console you'll be shown the help files for dbeta(), pbeta(), qbeta(), and rbeta().

To get a feel for how these functions work, let's take a look at rbinom(), the function for drawing from a Binomial distribution. Recall that a Binomial\((m,p)\) random variable equals the number of heads in \(m\) independent tosses of a coin with \(\mathbb{P}(\text{Heads})=p\). Or to use a bit of probability jargon, it equals the number of successes in \(m\) independent Bernoulli trials, each with probability of success \(p\).18 If \(X\) is a Binomial random variable with parameters \(m\) and \(p\), traditionally written as \(X \sim \text{Binomial}(m, p)\) then \(X\) must take on a value in the set \(\{0, 1, 2, ..., m\}\) and the probability that it takes on a particular value \(x\) in this set is

\[

\mathbb{P}(X = x) = \binom{m}{x} p^x (1 - p)^{m-x}

\]

The function rbinom() makes random draws with the probabilities given by this formula. Its takes three arguments: size is the number of trials, \(m\) in the formula, prob is the probability of success, \(p\) in the formula, and n is the desired number of Binomial draws. For example, we can make a single draw from a Binomial\((m = 10, p =1 /2)\) distribution as follows

rbinom(n = 1, size = 10, prob = 0.5)## [1] 6and fifteen draws from the same distribution by changing n to 10

rbinom(n = 15, size = 10, prob = 0.5)## [1] 7 1 4 6 8 6 3 5 6 4 2 4 5 4 4It's important not to confuse n with size. The former tells R how many Binomial draws to make. The latter tells R the value of the parameter \(m\) of the Binomial\((m, p)\) distribution.

Perhaps you remember that if \(X \sim \text{Binomial}(m, p)\) then \(\mathbb{E}[X] = mp\) and \(\text{Var}= mp(1-p)\). We can approximate these results numerically by simulating a large number of draws, say 5000, from a Binomial distribution:

m <- 20

p <- 0.25

n_sims <- 5000

sim_draws <- rbinom(n_sims, m, p)and then comparing the theoretical value for \(\mathbb{E}(X)\) to a simulation-based approximation:

c(EV_Theoretical = m * p,

EV_Simulation = mean(sim_draws))## EV_Theoretical EV_Simulation

## 5.000 5.016and similarly for \(\text{Var}(X)\)

c(Var_Theoretical = m * p * (1 - p),

Var_Simulation = var(sim_draws))## Var_Theoretical Var_Simulation

## 3.750000 3.605665Reassuringly, our simulation results are very close to the theoretical values. They would be even closer if we used a larger value for n_sims.

5.2.3 set.seed()

A key theme of this book is the importance of reproducible research. Anyone else who wants to check your work should be able to obtain exactly the same results as you did by running your code. But this seems to be at odds with the idea of simulating random data. For example, if I run rbinom(10, 4, 0.6) repeatedly, I'll most likely get different results each time:

rbinom(10, 4, 0.6)## [1] 3 1 3 1 2 2 2 3 4 2rbinom(10, 4, 0.6)## [1] 1 2 1 2 1 2 3 3 4 3rbinom(10, 4, 0.6)## [1] 2 3 1 2 4 4 0 3 3 3The function set.seed() allows us to ensure that we obtain the same simulation draws whenever we re-run the same simulation code. To use it, we simply choose a seed, any integer between negative and positive \((2^{31} - 1)\), and supply it as an argument to set.seed(). Simulation draws made on a computer aren't really random: they're only pseudo-random. This means that they "look" random and pass statistical tests for randomness but are in fact generated by a completely deterministic algorithm. Setting the seed sets the initial condition of the pseudorandom number generator. Because the algorithm is deterministic, the same initial condition always leads to the same results. This is what allows us to replicate our simulation draws. Each time we make another draw, the seed changes. But we can always return it to its previous state using set.seed(). For example, suppose I set the seed to 1. and re-run my code from above as follows

set.seed(1)

x1 <- rbinom(10, 4, 0.6)

x1## [1] 3 3 2 1 3 1 1 2 2 4If I run rbinom(10, 4, 0.6) again, I will most likely not get the same result, because the state of the pseudorandom number generator has changed:

x2 <- rbinom(10, 4, 0.6)

x2## [1] 3 3 2 3 2 2 2 0 3 2identical(x1, x2) # safe/reliable way to test if two objects are exactly equal## [1] FALSEbut if I reset the seed to 1 I'll obtain exactly the same result as before:

set.seed(1)

x3 <- rbinom(10, 4, 0.6)

x3## [1] 3 3 2 1 3 1 1 2 2 4identical(x3, x1)## [1] TRUEWhenever you write simulation code, start by choosing a seed and adding the line set.seed(MY-SEED-GOES-HERE) to the top of your R script. You'll often see people use set.seed(12345) or set.seed(54321). When I'm not feeling lazy, I like to generate a truly random number to use as my seed. The website random.org provides free access to bona fide random numbers generated from atmospheric noise. The "True Random Number Generator" on the top right of their main page allows you to make uniform integer draws on a range from "Min" to "Max." Using the widest possible range, \(\pm1\times 10^9\), I generated the seed 420508570 which I'll use in the following exercises.

5.2.4 Exercises

Set your seed to 420508570 at the start of your solution code for each of these exercises to obtain results that match the solutions.

- Run

sample(x = my_vector, size = 10). What happens and why?

R will throw an error. You can't make ten draws without replacement from a set of five objects:

set.seed(420508570)

sample(x = my_vector, size = 10)## Error in sample.int(length(x), size, replace, prob): cannot take a sample larger than the population when 'replace = FALSE'- Write a line of code that makes five draws without replacement from the set of numbers \(\{1, 2, 3, ..., 100\}\).

set.seed(420508570)

sample(1:100, 5)## [1] 100 67 51 44 12- Create a vector of thirty elements called

urnthat represents an urn containing ten blue balls and twenty red balls. Draw five balls with replacement fromurnand store the draws in a vector calleddraws. Then write a line of code to count up the number of blue balls indraws.

Use rep() and c() to construct urn. Use == and sum() to count up the number of blue balls in draws. See the relevant help files for details, e.g. ?rep.

set.seed(420508570)

urn <- c(rep('blue', 10), rep('red', 20))

draws <- sample(urn, 5, replace = TRUE)

draws## [1] "blue" "blue" "red" "red" "red"sum(draws == 'blue')## [1] 2- Make 1000 draws from a normal distribution with mean 5 and variance 4 and store them in a vector called

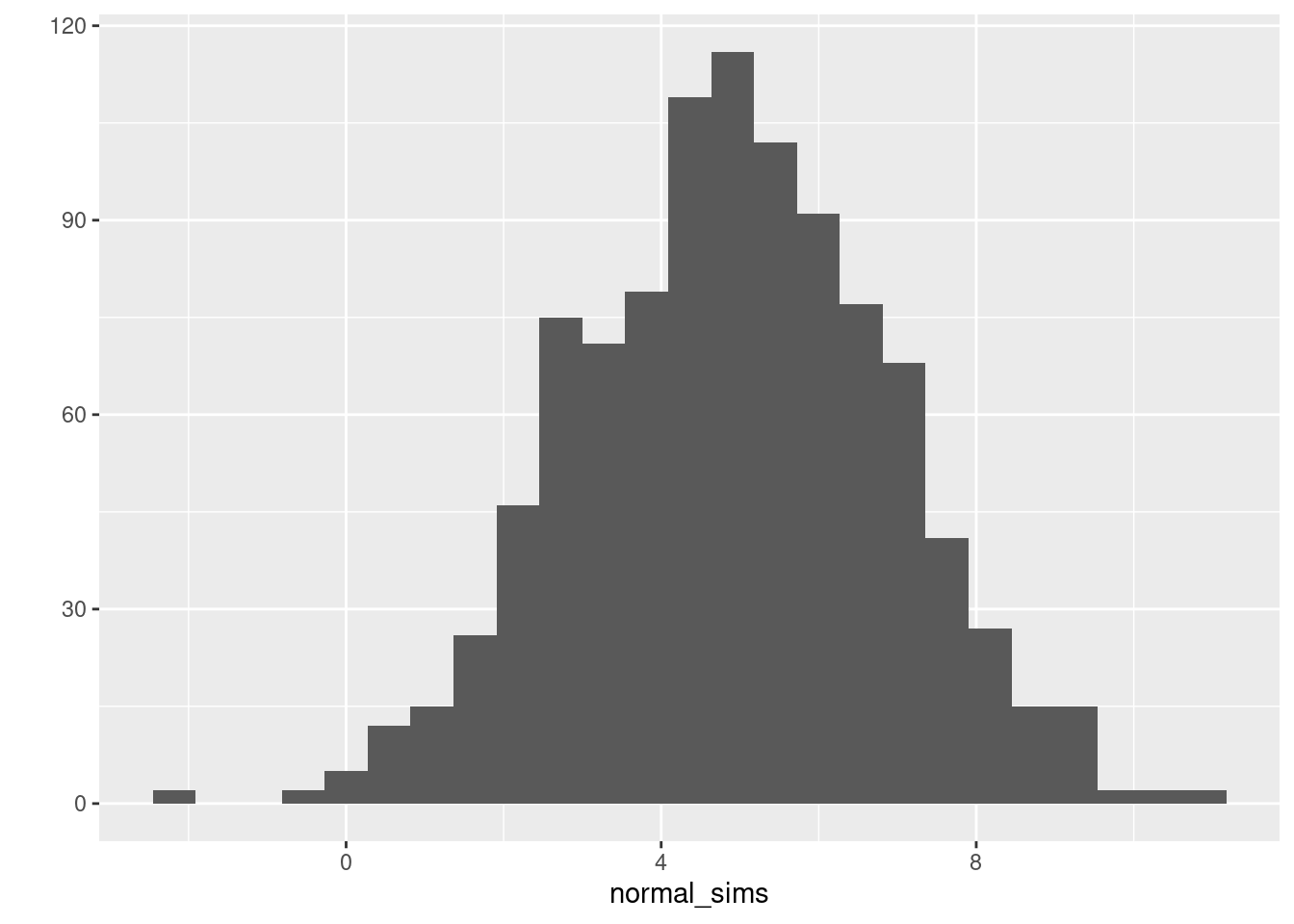

normal_sims. Calculate the mean and variance of your draws, and plot a histogram.

Consult the help file for rnorm() paying close attention to the fact that R specifies the normal distribution in terms of a mean and standard deviation rather than a mean and variance. You can plot a histogram with any number of functions: e.g. the base R function hist() or qplot() from the ggplot2 package.

set.seed(420508570)

normal_sims <- rnorm(1000, 5, 2) # Variance = 4; Standard Dev. = 2

mean(normal_sims)## [1] 4.895722var(normal_sims)## [1] 3.912262ggplot2::qplot(normal_sims, bins = 25)

- There is no built-in R function called

rbern()for simulating draws from the Bernoulli Distribution with probability of success \(p\). Write one of your own and use it to make ten Bernoulli(0.8) draws. Your functionrbern()should take two arguments: the number of drawsnand the probability of successp.

There are various ways to do this. The simplest is by setting the arguments of rbinom() appropriately.

rbern <- function(n, p) {

# Make n random draws from a Bernoulli(p) distribution

rbinom(n, size = 1, prob = p)

}

set.seed(420508570)

rbern(10, 0.8)## [1] 1 1 1 1 0 1 1 1 1 15.3 The Skeleton of a Simulation Study

While the specific details will vary, nearly every simulation study has the same basic structure:

- Generate simulated data.

- Calculate an estimate from the simulated data.

- Repeat steps 1 and 2 many times, saving each of the estimates.

- Summarize the results.

Thinking in terms of this structure helps us to write code that is easier to understand, easier to generalize, and faster to run. The key is to break these steps down into functions that carry out a single, well-defined task. Generally these will include:

- A function to generate simulated data.

- A function to calculate an estimate from the data.

- A function that repeatedly calls i. and ii. and summarizes the results.

This may sound a bit abstract, so in the remainder of this section we'll walk through the details in a simple example: estimating the bias of the maximum likelihood estimator for the variance of a normal distribution. Along the way we'll explore three extremely helpful R functions for carrying simulation studies: replicate(), expand.grid(), and Map(). In the next section you'll apply what you've learned to the hot hand example.

5.3.1 A Biased Estimator of \(\sigma^2\)

My introductory statistics students often ask me why the sample variance, \(S^2\), divides by \((n-1)\) rather than the sample size \(n\): \[ S^2 \equiv \frac{1}{n-1}\sum_{i=1}^n (X_i - \bar{X}_n)^2 \] The answer is that dividing by \((n-1)\) yields an unbiased estimator: if \(X_1, ..., X_n\) are a random sample from a population with mean \(\mu\) and variance \(\sigma^2\), then \(\mathbb{E}[S^2] = \sigma^2\). So what would happen if we divided by \(n\) instead? Consider the estimator \(\widehat{\sigma}^2\) defined by \[ \widehat{\sigma}^2 \equiv \frac{1}{n}\sum_{i=1}^n (X_i - \bar{X}_n)^2. \] If \(X_i \sim \text{Normal}(\mu, \sigma^2)\) then \(\widehat{\sigma}^2\) is in fact the maximum likelihood estimator for \(\sigma^2\). With a bit of algebra, we can show that \(\mathbb{E}[\widehat{\sigma}^2] = (n-1)\sigma^2/n\) which clearly does not equal the population variance.19 It follows that \[ \text{Bias}(\widehat{\sigma}^2) \equiv \mathbb{E}[\widehat{\sigma}^2 - \sigma^2] = -\sigma^2/n \] so \(\widehat{\sigma}^2\) is biased downwards. Because the bias goes to zero as the sample size grows, however, it is still a consistent estimator of \(\sigma^2\).

Another way to see that \(\widehat{\sigma}^2\) is biased is by carrying out a simulation study. To do this, we generate data from a distribution with a known variance and calculate \(\widehat{\sigma}^2\). Then we generate a new dataset from the same distribution and again calculate the corresponding value of \(\widehat{\sigma}^2\). Repeating this a large number of times, we end up with many estimates \(\widehat{\sigma}^2\), each based on a dataset of the same size drawn independently from the same population. This collection of estimates gives us an approximation to the sampling distribution of \(\widehat{\sigma}^2\). Using this approximation, we can get a good estimate of \(\text{Bias}(\widehat{\sigma}^2)\) by comparing the sample mean of our simulated estimates \(\widehat{\sigma}^2\) to the true variance \(\sigma^2\).

5.3.2 draw_sim_data()

The first thing we need is a function to generate simulated data. Let's draw the \(X_1, ..., X_n\) from a normal distribution with mean zero and variance s_sq. To do this, we write a simple R function as follows:

draw_sim_data <- function(n, s_sq) {

rnorm(n, sd = sqrt(s_sq))

}The nice thing about writing such a function is that we can test that it's working correctly. For example, suppose you were worried that draw_sim_data does not in fact generate n draws from a normal distribution with mean zero and variance s_sq. Then you could simply draw a large sample and check! Here I'll verify that draw_sim_data() returns a vector of the expected length, with the desired mean and variance, drawn from normal distribution.20 Everything works as expected:

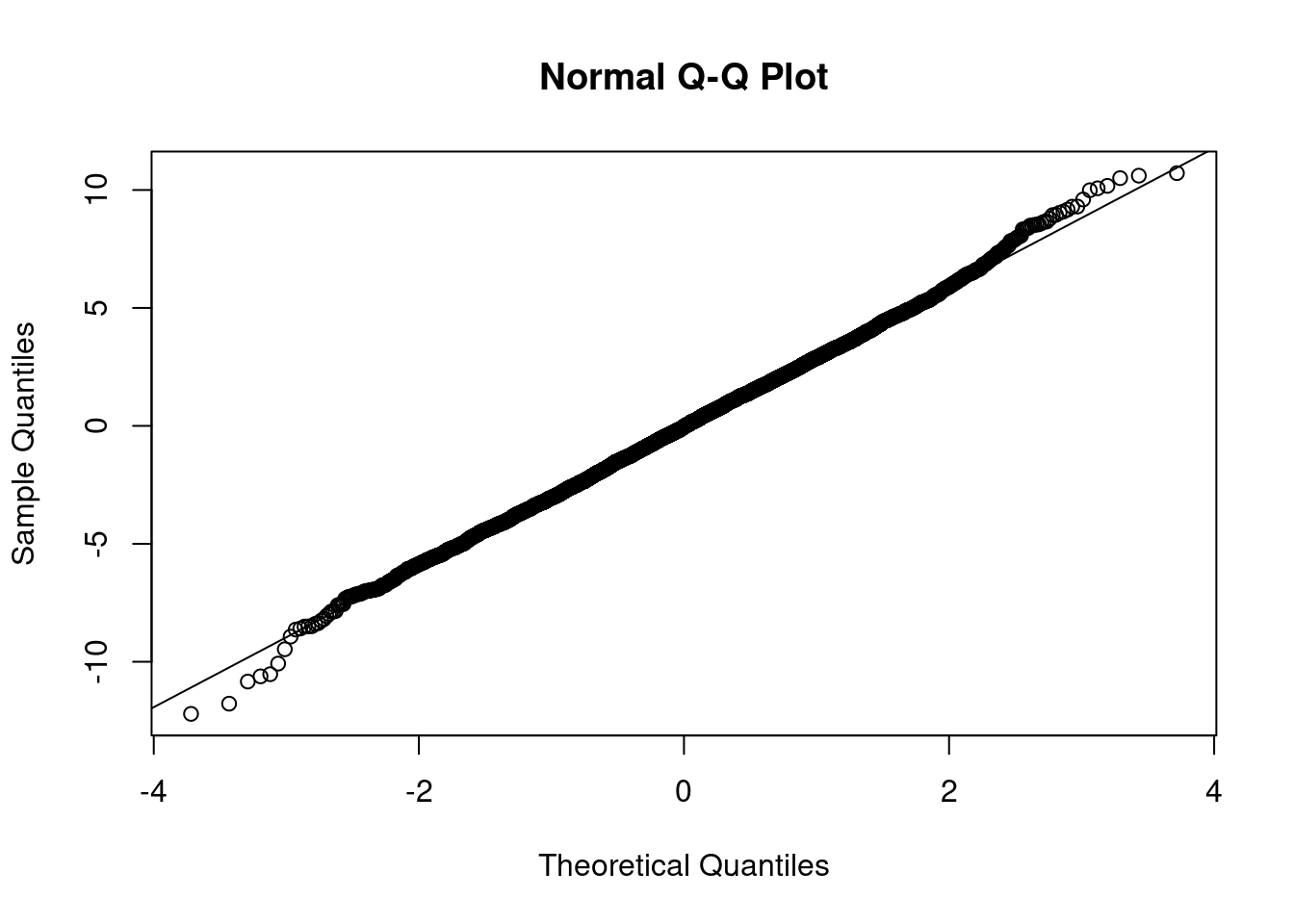

set.seed(420508570)

test_sims <- draw_sim_data(5000, 9)

length(test_sims)## [1] 5000mean(test_sims)## [1] -0.04620928var(test_sims)## [1] 8.832994qqnorm(test_sims)

qqline(test_sims)

5.3.3 get_estimate()

The next step is to write a function that calculates \(\widehat{\sigma}^2\). We can do this as follows:

get_estimate <- function(x) {

sum((x - mean(x))^2) / length(x) # divides by n not (n-1)

}Again it's a good idea to test your code before proceeding. There are several tests we could consider running. First, if all the elements of x are the same then get_estimate() should return zero because (x - mean(x)) will simply be a vector of zeros. Everything looks good:

get_estimate(rep(5, 25))## [1] 0get_estimate(rep(0, 10))## [1] 0Second, get_estimate() should not in general give the same result as var(), R's built-in function for the sample variance. This is because the latter divides by \(n\) rather than \((n-1)\). But if \(n\) is very large, this difference should become negligible. Again, everything works as expected:

set.seed(420508570)

sim_small <- draw_sim_data(5, 1)

c(sigma_hat_sq = get_estimate(sim_small), Sample_Var = var(sim_small))## sigma_hat_sq Sample_Var

## 0.1710749 0.2138436sim_big <- draw_sim_data(5000, 1)

c(sigma_hat_sq = get_estimate(sim_big), Sample_Var = var(sim_big))## sigma_hat_sq Sample_Var

## 0.9814408 0.98163715.3.4 get_bias()

Now we're ready to actually carry out our simulation study. The final step is to write a function called get_bias() that repeatedly calls draw_sim_data() and get_estimate(), stores the resulting estimates \(\widehat{\sigma}^2\) and calculates a simulation estimate of the bias. Compared to the functions from above, this one will be more complicated, so I'll explain it in steps. First the code:

get_bias <- function(n, s_sq, n_reps = 5000) {

draw_sim_replication <- function() {

sim_data <- draw_sim_data(n, s_sq)

get_estimate(sim_data)

}

sim_replications <- replicate(n_reps, draw_sim_replication())

mean(sim_replications - s_sq)

}The function get_bias() takes three arguments: n is the sample size for each replication of the simulation experiment, s_sq is the true population variance from which we will simulate normal data, and n_reps is the number of simulation replications, i.e. the number of times that we want to repeat the simulation. The final argument, n_reps is optional: if you call get_bias() and supply only the first two arguments, R will set n_reps equal to the default value of 5000.

The first step inside of get_bias() constructs a function called draw_sim_replication() that doesn't take any input arguments. This may seem strange: I'll explain it in a moment. For now, focus on the steps that draw_sim_replication() carries out. It first runs draw_sim_data(n, s_q) and stores the result in a vector called sim_data. Next it feeds sim_data as an input to get_estimate(). In other words, it carries out one replication of our simulation experiment. But how does the call to draw_sim_data() "know" which values to use for n and s_sq given that draw_sim_replication() doesn't take any input arguments? The key is that draw_sim_replication() is created inside of another function: get_bias(). When draw_sim_replication() encounters a reference to n and s_sq, it substitutes the values that were supplied as arguments to get_bias().

Here's another way of looking at draw_sim_replication(). We want to be able to run our simulation study for different values of n and s_sq. After we tell get_bias() our desired values of n and s_sq, it constructs a function for us called draw_sim_replication() that hard codes these particular parameter values. From this point on, calling draw_sim_replication() does "the right thing" without our having to explicitly specify n and s_sq.

The next step of get_bias() uses the function replicate() to repeatedly call the function draw_sim_replication() a total of n_reps times. The results are stored in a vector called sim_replications. In essence, replicate() is shorthand for a common way of using a for loop. In the following example, x and y will be identical. But constructing x requires much more work: we first need to set up an empty vector, and then explicitly loop over it. In contrast, replicate() does all of this behind the scenes to construct y:

do_something <- function() {

return(42)

}

x <- rep(NA, 50)

for(i in 1:50) {

x[i] <- do_something()

}

y <- replicate(50, do_something())

identical(x, y)## [1] TRUEFinally, get_bias() uses the simulation replications stored in the vector sim_replications to approximate the bias of \(\widehat{\sigma}^2\) by comparing them to the true value of \(\sigma^2\), namely s_sq. It does this by computing the simulation analogue of \(\mathbb{E}[\widehat{\sigma}^2 - \sigma^2]\), which is simply mean(sim_replications - s_sq).

5.3.5 Running the Simulation Study

Now we're ready to run our simulation study: we simply need to call get_bias() with our desired values of n and s_sq, for example:

set.seed(420508570)

get_bias(n = 5, s_sq = 1)## [1] -0.2007312It works! Up to simulation error, this result agrees with the theoretical bias of \(-\sigma^2/n = -1/5\). To see that this isn't simply a fluke, we could try different values of n and s_sq. Again, the results agree with the theoretical values:

set.seed(420508570)

c(theoretical = -1/3, simulation = get_bias(3, 1))## theoretical simulation

## -0.3333333 -0.34121765.3.6 expand.grid() and Map()

Now we have a function get_bias() that can approximate the bias of \(\widehat{\sigma}^2\) for any values of n and s_sq that we care to specify. But what if we want to carry out a simulation study over a range of values for n and s_sq? One way to do this is with a pair of nested for loops: one that iterates over different values of n and another that iterates over different values of s_sq. But this isn't a great strategy for two reasons. First, loops within loops tend to be slow in R. Second, the book-keeping required to implement this strategy is a bit involved. Fortunately there's a much better way: use expand.grid() and Map().

First we'll set up a grid of values for n and s_sq:

n_grid <- 3:5

n_grid## [1] 3 4 5s_sq_grid <- seq(from = 1, to = 3, by = 0.5)

s_sq_grid## [1] 1.0 1.5 2.0 2.5 3.0Now suppose that we want to run get_bias() for every combination of values in n_grid and s_sq_grid. Using the built-in R function expand.grid() we can easily construct a data frame whose rows contain all of these combinations:

parameters <- expand.grid(n = n_grid, s_sq = s_sq_grid)

parameters## n s_sq

## 1 3 1.0

## 2 4 1.0

## 3 5 1.0

## 4 3 1.5

## 5 4 1.5

## 6 5 1.5

## 7 3 2.0

## 8 4 2.0

## 9 5 2.0

## 10 3 2.5

## 11 4 2.5

## 12 5 2.5

## 13 3 3.0

## 14 4 3.0

## 15 5 3.0The next step is to evaluated get_bias() repeatedly, once for every combination of parameter values stored in the rows of parameters. The Map() function makes this easy:

set.seed(420508570)

bias <- Map(get_bias, n = parameters$n, s_sq = parameters$s_sq)Much like replicate(), Map() is shorthand for a common kind of for loop. In this case we loop over the rows of parameters. The first argument to Map() is the name of the function that we want to call repeatedly, in our case get_bias(). The remaining arguments are vectors of values. These are the arguments that Map() passes to get_bias(). The result of running the above code is a list of value, one for each row of parameters.

head(bias)## [[1]]

## [1] -0.3412176

##

## [[2]]

## [1] -0.2546259

##

## [[3]]

## [1] -0.2005197

##

## [[4]]

## [1] -0.5007384

##

## [[5]]

## [1] -0.3816447

##

## [[6]]

## [1] -0.292554length(bias)## [1] 15For example, the first element of bias corresponds to get_bias(3, 1). By setting the same seed and running this command "manually" we can verify that everything works as expected:

set.seed(420508570)

identical(get_bias(3, 1), bias[[1]])## [1] TRUEThis pattern using expand.grid() and Map() is extremely flexible. In our example, get_bias() returns a scalar so bias is just a list of numbers. But more generally Map() can return a list that contains any kind of object at all. Here's a slightly more interesting example. The function get_bias_and_var() is a very slight modification of get_bias() from above that returns a list of two named elements: bias and variance

get_bias_and_var <- function(n, s_sq, n_reps = 5000) {

draw_sim_replication <- function() {

sim_data <- draw_sim_data(n, s_sq)

get_estimate(sim_data)

}

sim_replications <- replicate(n_reps, draw_sim_replication())

list(bias = mean(sim_replications - s_sq),

variance = var(sim_replications))

}We can use this function to calculate both the bias and variance of the MLE \(\widehat{\sigma}^2\) as follows

set.seed(420508570)

bias_and_variance <- Map(get_bias_and_var, n = parameters$n, s_sq = parameters$s_sq)

bias_and_variance[1:3]## [[1]]

## [[1]]$bias

## [1] -0.3412176

##

## [[1]]$variance

## [1] 0.4554343

##

##

## [[2]]

## [[2]]$bias

## [1] -0.2546259

##

## [[2]]$variance

## [1] 0.3643993

##

##

## [[3]]

## [[3]]$bias

## [1] -0.2005197

##

## [[3]]$variance

## [1] 0.32433155.3.7 Formatting the Results

We've carried out our simulation experiment, but the results are a bit messy. The parameter values are stored in a data frame called parameters and the biases and variances are stored in a list called bias_and_var. Let's format things a bit more nicely. If you have a list my_list whose elements are "rows" and you want to bind them together into a data frame, you can use the somewhat inscrutable command do.call(rbind, my_list). For example:

do.call(rbind, bias_and_variance)## bias variance

## [1,] -0.3412176 0.4554343

## [2,] -0.2546259 0.3643993

## [3,] -0.2005197 0.3243315

## [4,] -0.5007384 0.9496033

## [5,] -0.3816447 0.8616167

## [6,] -0.292554 0.7173205

## [7,] -0.6609821 1.781236

## [8,] -0.5061243 1.538961

## [9,] -0.3953514 1.326648

## [10,] -0.8513285 2.705087

## [11,] -0.6122964 2.467789

## [12,] -0.4727481 2.014822

## [13,] -0.9570253 4.124553

## [14,] -0.7513177 3.201657

## [15,] -0.567449 2.916767Now we'll overwrite bias_and_var with the above and bind its columns, cbind(), with those of parameters

bias_and_variance <- do.call(rbind, bias_and_variance)

sim_results <- cbind(parameters, bias_and_variance)

sim_results## n s_sq bias variance

## 1 3 1.0 -0.3412176 0.4554343

## 2 4 1.0 -0.2546259 0.3643993

## 3 5 1.0 -0.2005197 0.3243315

## 4 3 1.5 -0.5007384 0.9496033

## 5 4 1.5 -0.3816447 0.8616167

## 6 5 1.5 -0.292554 0.7173205

## 7 3 2.0 -0.6609821 1.781236

## 8 4 2.0 -0.5061243 1.538961

## 9 5 2.0 -0.3953514 1.326648

## 10 3 2.5 -0.8513285 2.705087

## 11 4 2.5 -0.6122964 2.467789

## 12 5 2.5 -0.4727481 2.014822

## 13 3 3.0 -0.9570253 4.124553

## 14 4 3.0 -0.7513177 3.201657

## 15 5 3.0 -0.567449 2.9167675.4 Exercise - The Hot Hand

Now that you know the basics of running a simulation study in R, it's time to re-visit the Hot Hand example introduced above. Let shots be a sequence of shots made by a basketball player, Liz. In R, shots is stored as a vector of zeros and ones. If Liz has a "hot hand" then her chance of making her next shot should be higher when her last few shots were a success. This means we should expect ones to become more likely after a streak of ones in the vector shots. If Liz doesn't have a hot hand, then she should be no more likely to make her next shot after a streak of successes on her last few shots.

Before Miller & Sanjurjo (2018), researchers studying the hot hand operationalized this intuition as follows. Define a "streak" as \(k\) successes in a row. For each streak in shots, look at the shot that immediately follows it. Then collect each of these shots following a streak into another vector after_streak. Finally, compare the proportion of successes in shots to that in after_streak. Since each vector contains zeros and ones, we can make this comparison using mean(). If there's no hot hand, it seems reasonable to expect that mean(after_streak) will approximately equal to mean(shots). If there is a hot hand, on the other hand, then it seems reasonable to expect that mean(after_streak) should be larger than mean(shots).

In this simulation study, rather than using a vector shots of a real basketball player, we'll draw a random sequence of zeros and ones. Since we control how the sequence is generated, this gives us a way of checking whether the procedure described in the preceding paragraph works as expected. To keep things simple, we'll set \(k = 3\) and generate shots as an iid sequence of Bernoulli(p) draws. Such a sequence clearly does not exhibit a hot hand. Moreover, there's no need to calculate mean(shots) because we know that the chance of making each simulated shot is p. This means that the exercise reduces to comparing mean(after_streak) to p. For example, if p is 0.5, then we compare mean(after_streak) to 0.5.

- Write a function called

get_avg_after_streak()that takes a single input argument:shotsa vector of zeros and ones. Your function should work as follows. First, create an empty vector of the same length asshotscalledafter_streak. Second, set each element ofafter_streaktoTRUEorFALSEdepending on whether the corresponding element ofshotsfollows a streak of three ones (TRUE) or not (FALSE). Third, extract all the elements ofshotsfor whichafter_streakis true and calculate the proportion of these elements that equal one. Test your function on the vectorc(0, 1, 1, 1, 1, 0, 0, 0)to make sure that it's working correctly.

For each element of shots we need to decide whether to set the corresponding element of after_streak to TRUE or FALSE. Regardless of the values that they take, the first three elements of shots cannot possibly follow a streak: there aren't three shots that precede them! This means that the first three elements of after_streak should always be set to FALSE. All that remains is to examine elements 4, 5, ..., n of shots. The easiest way to do this is with a for loop. For a given element i of shots, where i is between 4 and n, think about how to extract the three elements of shots that precede element i. Once you've extracted them, there are various ways to test whether all three equal one. The easiest way is to use the R function all(). If you find yourself getting confused, try working through the logic of the for loop by hand in the example scores <- c(0, 1, 1, 1, 1, 0, 0, 0).

get_avg_after_streak <- function(shots) {

# shots should be a vector of 0 and 1; if not STOP!

stopifnot(all(shots %in% c(0, 1)))

n <- length(shots)

after_streak <- rep(NA, n) # Empty vector of length n

# The first 3 elements of shots by definition cannot

# follow a streak

after_streak[1:3] <- FALSE

# Loop over the remaining elements of shots

for(i in 4:n) {

# Extract the 3 shots that precede shot i

prev_three_shots <- shots[(i - 3):(i - 1)]

# Are all three of the preceding shots equal to 1?

# (TRUE/FALSE)

after_streak[i] <- all(prev_three_shots == 1)

}

# shots[after_streak] extracts all elements of shots

# for which after_streak is TRUE. Taking the mean of

# these is the same as calculating the prop. of ones

mean(shots[after_streak])

}

get_avg_after_streak(c(0, 1, 1, 1, 1, 0, 0, 0))## [1] 0.5- Write a function called

draw_shots()that simulates an iid sequence of Bernoulli draws representing basketball shots, where1denotes a success and0denotes a miss. Your function should take two arguments:n_shotsis the number of shots, andprob_successis the probability that any given shot will be a success. It should return a vector of lengthn_shotscontaining the simulation draws. Test your function by drawing a large sequence of shots withprob_successequal to 0.5 and calculating the sample mean.

draw_shots <- function(n_shots, prob_success) {

rbinom(n_shots, 1, prob_success)

}

set.seed(420508570)

mean(draw_shots(1e4, 0.5))## [1] 0.4993- Write a function called

get_hot_handedness()that combinesdraw_shots()andget_avg_after_streak()to calculated "hot handedness" of a randomly-drawn sequence. In words:get_est()should draw a sequenceshotsof simulated basketball shots and then calculate and return the fraction of successes insim_shotsamong the shots that immediately follow a streak of three successes. Think carefully about what argumentsget_est()needs to take if we want to be able to vary the length and success probability of our simulated sequence of basketball shots.

get_hot_handedness <- function(n_shots, prob_success) {

sim_shots <- draw_shots(n_shots, prob_success)

get_avg_after_streak(sim_shots)

}- Amos watches Liz take 100 basketball shots and calculates a test statistic \(T\) as follows: he identifies the shots that immediately follow a "streak" of three successful shots, and calculates Liz's success rate on this subset of shots. Amos argues as follows: "Suppose that Liz does not have a hot hand: each of her shots is independent of the others and her probability of success on a given shot is 1/2. Then the expected value of my \(T\) will be one half." Use

replicate()andget_hot_handedness()to carry out a simulation study with 10,000 replications to check whether Amos is correct.

It's possible, though unlikely, that get_hot_handedness() will return an NaN. This happens when the sequence of 100 simulated basketball shots contains no streaks of length three. We need to ignore any such simulation draws. The easiest way to do this is by using the na.rm of mean() to TRUE.

Amos is wrong: the expected value of his test statistic is approximately 0.46 rather than 0.5. On average, he will conclude that Liz has a cold hand when in fact her shots are iid. This approach is biased against finding evidence of a hot hand, if it exists:

set.seed(420508570)

sims <- replicate(1e4, get_hot_handedness(100, 0.5))

mean(sims, na.rm = TRUE) ## [1] 0.4651983- Use

Map()andexpand.grid()to repeat the preceding exercise over a grid of values for the number of shots that Liz takes,n_shots, and her probability of success,prob_success. In particular, considern_shotsin \(\{50, 100, 200\}\) andprob_successin \(\{0.4, 0.5, 0.6\}\). Usedo.call()andcbind()to summarize your results in a simple table. What conclusions do you draw?

Before using Map() and expand.grid() you'll need to create a function that can carry out the simulation study for fixed values of n_shots and prob_success. Call it get_test_stat_avg(). In broad strokes, you can base it on the function get_bias() that I explained earlier in the lesson. Just remember that our goal in this example is to calculate the mean of Amos' test statistic over simulation replications rather than its bias.

# Function to carry out the simulation study for a fixed

# combination of values for n_shots and prob_success

get_test_stat_avg <- function(n_shots, prob_success, n_reps = 5e3) {

draw_sim_replication <- function() {

sim_test_stat <- get_hot_handedness(n_shots, prob_success)

}

sim_replications <- replicate(n_reps, draw_sim_replication())

mean(sim_replications, na.rm = TRUE)

}

# Construct a grid of values for n_shots and

# prob_success

n_shots_grid <- c(50, 100, 200)

prob_success_grid <- c(0.4, 0.5, 0.6)

parameters <- expand.grid(n_shots = n_shots_grid,

prob_success = prob_success_grid)

# Carry out the simulation study at each combination

# of parameter values

set.seed(420508570)

test_stat_avgs <- Map(get_test_stat_avg,

n_shots = parameters$n_shots,

prob_success = parameters$prob_success)

# Store the results in a simple table and

# display them

test_stat_avgs <- do.call(rbind, test_stat_avgs)

results <- cbind(parameters, test_stat_avgs)

results## n_shots prob_success test_stat_avgs

## 1 50 0.4 0.2934501

## 2 100 0.4 0.3376670

## 3 200 0.4 0.3705452

## 4 50 0.5 0.4256395

## 5 100 0.5 0.4582441

## 6 200 0.5 0.4810223

## 7 50 0.6 0.5491750

## 8 100 0.6 0.5804618

## 9 200 0.6 0.5892968For every combination of n_shots and prob_success, Amos' test statistic appears to be biased downwards: test_stat_avgs is always smaller than prob_success. For a fixed value of prob_success, the bias appears to be more severe when n_shots is smaller.

Bonus Question: Repeat the preceding exercise for different definitions of a streak, e.g. two successes in a row or four successes in a row. Think about which functions you'll need to change and how. How do your results change?

Bonus Question: The exercises above consider a simulated basketball player who does not have a hot hand. Design a new simulation with a player who does exhibit a hot hand and see how this affects the results. (There are many ways to set up a simulation with dependence between trials, so there are many possible answers to this question.)

See for example this write-up of Lantis & Nessen (2021) in the February 2022 NBER digest.↩︎

An ungated version of Benjamin (2019) is available here.↩︎

See Miller & Sanjurjo (2019) for a more accessible explanation that connects to several related probability puzzles.↩︎

Technically, "random" draws made on a computer are only pseudorandom. We'll discuss this further below.↩︎

For less common distributions, see CRAN Task View: Probability Distributions↩︎

A Bernoulli trial is a model for a possibly biased coin flip: if \(X \sim \text{Bernoulli}(p)\) then \(\mathbb{P}(X=1) = p\) and \(\mathbb{P}(X=0) = 1-p\).↩︎

To see this, first rewrite \(\sum_{i=1}^n (X_i - \bar{X}_n)^2\) as \(\sum_{i=1}^n (X_i - \mu)^2 - n(\bar{X}_n - \mu)^2\). This step is just algebra. Then take expectations, using the fact that the \(X_i\) are independent and identically distributed.↩︎

If you're unfamiliar with the Normal Q-Q plot that I used to check the normality of

test_sims, you can read about it this blog post.↩︎